DriveDreamer4D: World Models Are Effective Data Machines for 4D Driving Scene Representation

- Guosheng Zhao¹,²

- Chaojun Ni¹,⁴

- Xiaofeng Wang¹,²

- Zheng Zhu¹✉

- Xueyang Zhang³

- Yida Wang³

- Guan Huang¹

- Xinze Chen¹

- Boyuan Wang¹,²

- Youyi Zhang⁵

- Wenjun Mei⁴

- Xingang Wang²✉

- 1 GigaAI

- 2 Institute of Automation, Chinese Academy of Sciences

- 3 Li Auto Inc.

- 4 Peking University

- 5 Technical University of Munich

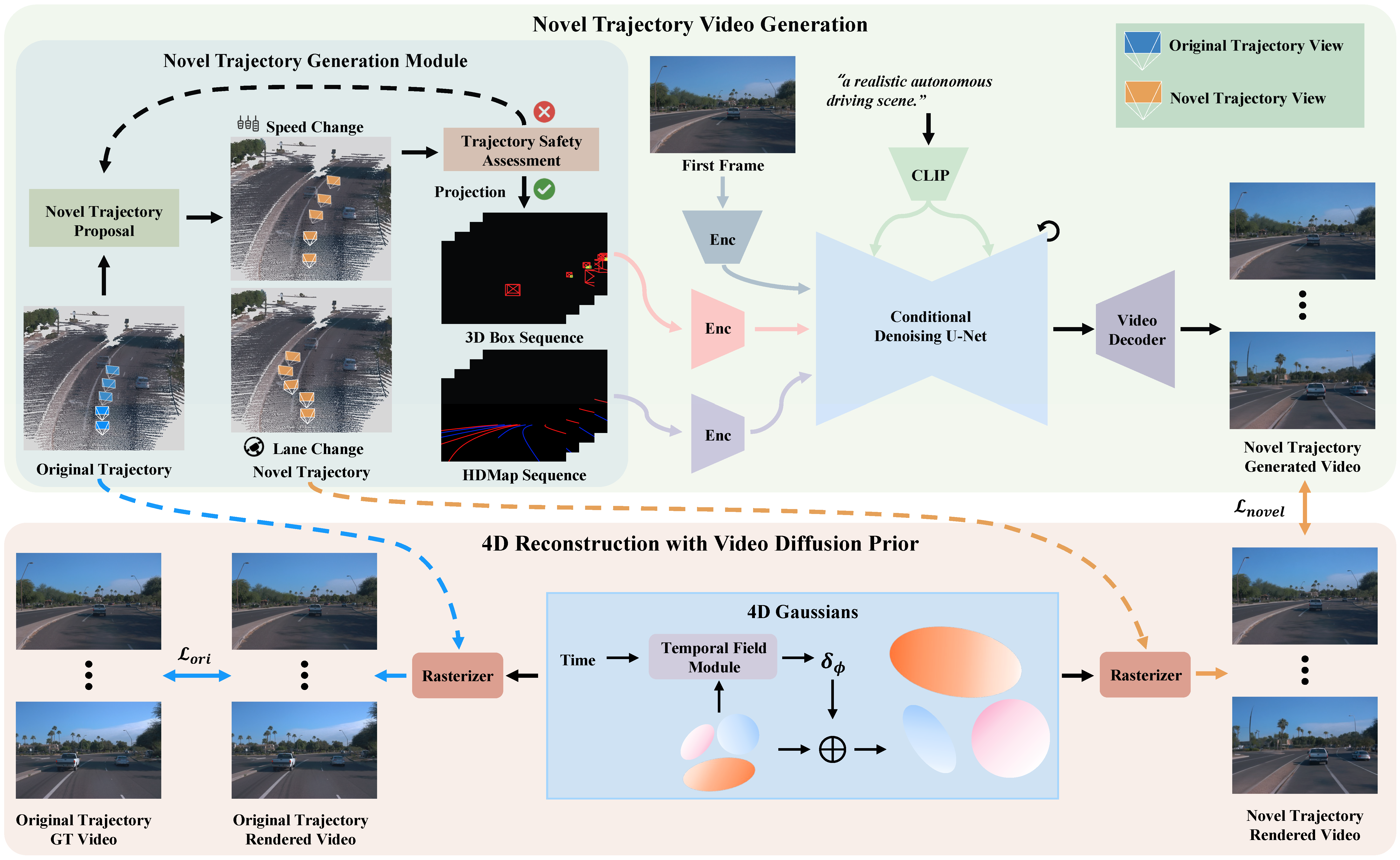

The pipeline of DriveDreamer4D

Abstract

Closed-loop simulation is essential for advancing end-to-end autonomous driving systems. Contemporary sensor simulation methods, such as NeRF and 3DGS, rely predominantly on conditions closely aligned with the training data distribution, which is largely confined to forward-driving scenarios. Consequently, these methods struggle to render complex maneuvers (e.g., lane changes, acceleration, and deceleration). Recent advances in autonomous-driving world models have demonstrated the potential to generate diverse driving videos. However, these approaches remain constrained to 2D video generation and inherently lack the spatiotemporal coherence required to capture the intricacies of dynamic driving environments. In this paper, we introduce DriveDreamer4D, which enhances 4D driving scene representation by leveraging world model priors. Specifically, we use the world model as a data machine to synthesize novel trajectory videos, where structured conditions are explicitly leveraged to control the spatiotemporal consistency of traffic elements. In addition, we propose a cousin data training strategy that merges real and synthetic data for optimizing 4DGS. To our knowledge, DriveDreamer4D is the first work to use video generation models to improve 4D reconstruction in driving scenarios. Experimental results show that DriveDreamer4D significantly enhances generation quality under novel trajectory views, achieving relative FID improvements of 32.1%, 46.4%, and 16.3% over PVG, S3Gaussian, and Deformable-GS, respectively. Moreover, DriveDreamer4D markedly enhances the spatiotemporal coherence of driving agents, as verified by a comprehensive user study and by relative increases of 22.6%, 43.5%, and 15.6% in the NTA-IoU metric.
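The abstract reports results as *relative* changes. FID is lower-is-better, and NTA-IoU is assumed here to be higher-is-better. The sketch below shows the usual way such percentages are computed: the change divided by the baseline score, with the sign flipped for lower-is-better metrics. This formula is an assumption, because this page does not state how the percentages were derived. The function name `relative_improvement` and all scores are hypothetical and are not numbers from the paper.

```python
def relative_improvement(baseline: float, ours: float, higher_is_better: bool) -> float:
    """Return the relative improvement of `ours` over `baseline`, in percent.

    Assumed convention (not confirmed by the source):
      lower-is-better  (e.g. FID):     (baseline - ours) / baseline
      higher-is-better (e.g. NTA-IoU): (ours - baseline) / baseline
    """
    if baseline == 0:
        raise ValueError("baseline score must be non-zero")
    # Positive delta always means "ours is better", whichever direction the metric runs.
    delta = ours - baseline if higher_is_better else baseline - ours
    return 100.0 * delta / baseline


if __name__ == "__main__":
    # Hypothetical scores for illustration only; these are NOT the paper's numbers.
    fid_baseline, fid_ours = 50.0, 40.0
    iou_baseline, iou_ours = 0.40, 0.50

    fid_gain = relative_improvement(fid_baseline, fid_ours, higher_is_better=False)
    iou_gain = relative_improvement(iou_baseline, iou_ours, higher_is_better=True)
    print(f"FID relative improvement:     {fid_gain:.1f}%")
    print(f"NTA-IoU relative improvement: {iou_gain:.1f}%")
```

Dividing by the baseline makes the percentage depend on how strong each baseline already is. The same absolute FID drop therefore produces a larger relative gain over a weaker baseline.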

Comparisons

Rendering results on lane-change novel trajectories

Rendering results on speed-change novel trajectories

Citation

Acknowledgements

The website template was borrowed from Michaël Gharbi and Jon Barron.